Introduction

Next-generation memory technologies such as Phase Change Memory (PCM) aim to close gaps in today’s storage hierarchy, delivering data where it is needed for real-time processing. In the data center, PCM promises to bridge the gap between DRAM and flash. This project explores efficient algorithms and architectures for designing and implementing PCM that meets the storage needs of consumers. PCM still has significant shortcomings compared with Dynamic RAM (DRAM). To overcome them, we will improve the write energy, reliability, and durability of PCM, a key enabling technology for non-volatile electrical data storage at the nanometer scale. The project addresses these problems from both a hardware and a software perspective. On the hardware side, all proposed solutions aim to reduce the write energy of a PCM main memory system while addressing its limited endurance and hard-error problems. On the software side, the work involves operating system support for translating virtual memory addresses to physical PCM addresses.

Another facet of this project considers the design and development of Deep Neural Networks (DNNs) at the chip level. We exploit the characteristics of flash memory cells to build an efficient DNN within a chip, either by leveraging the structure of NAND flash memory cell strings (with separate word-line control) or by adopting the structure of NOR flash memory cells (with shared word-line control).

REU students will conduct comprehensive literature reviews, contribute to the implementation and evaluation of the proposed methodologies, and take part in manuscript preparation and presentation development.

SMART WRITE: Adaptive Learning-Based Write Energy Optimization for Phase Change Memory

Background

Phase Change Memory (PCM) is a type of nonvolatile memory with in-place programmability. It contains a chalcogenide material (GST) that transitions between amorphous (0) and crystalline (1) states when heated to specific temperatures. PCM supports single and multi-level cell programmability.

Challenges

PCM faces three main challenges: limited lifetime, as the material degrades after repeated heating; slow write speed; and high energy consumption from write operations.

PCM offers high scalability, low leakage power, and low read latency. By reducing the energy consumption and latency of write operations, we can position PCM as the next phase in memory systems.

Methodologies

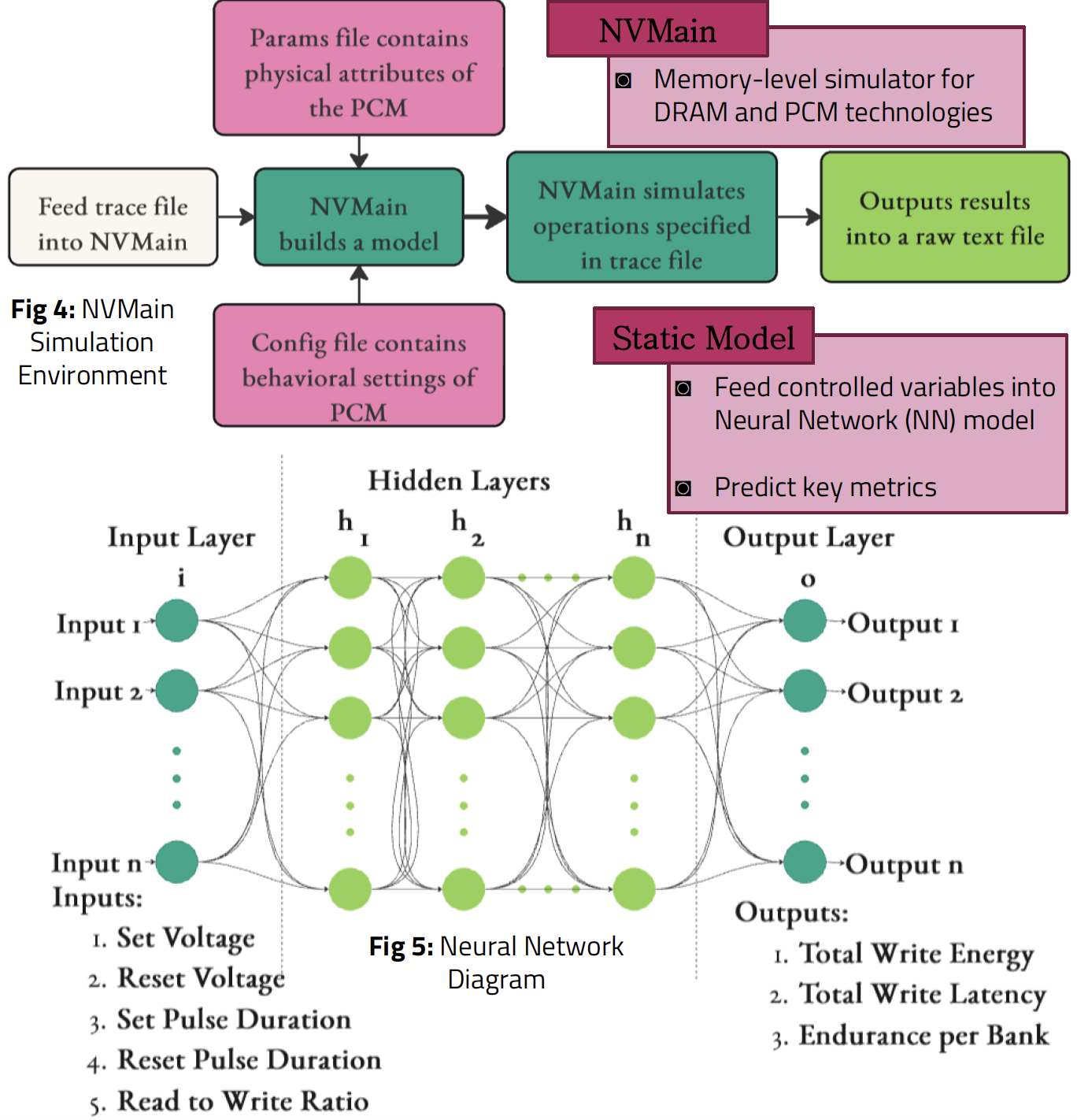

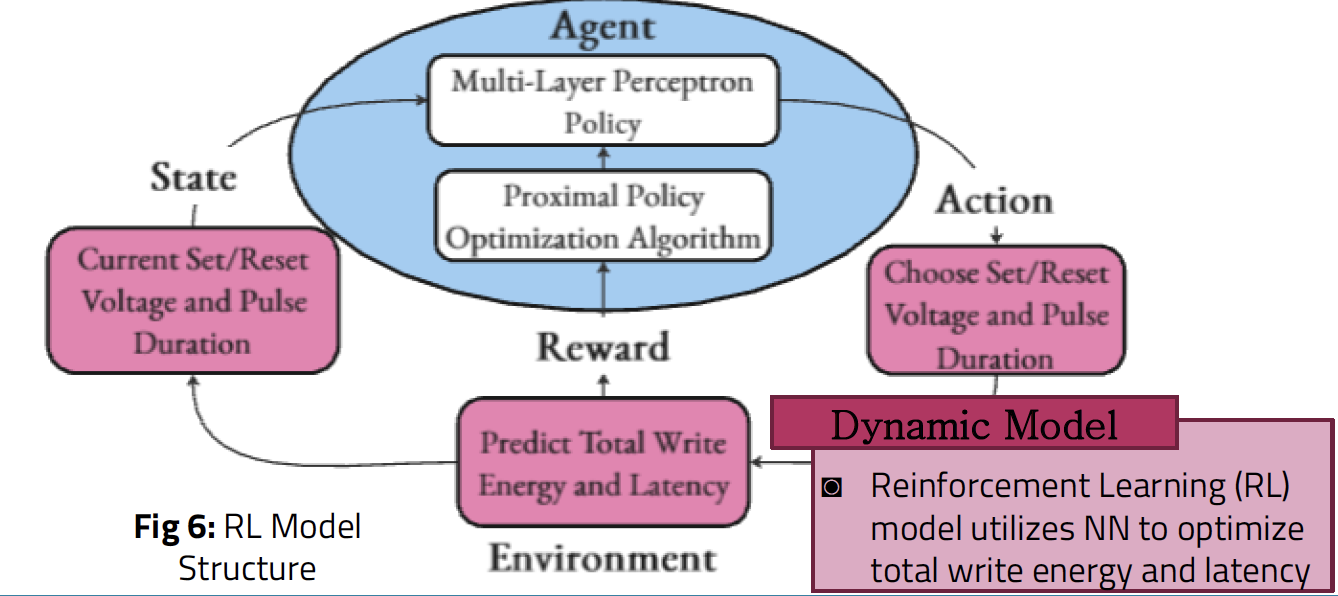

Reinforcement Learning

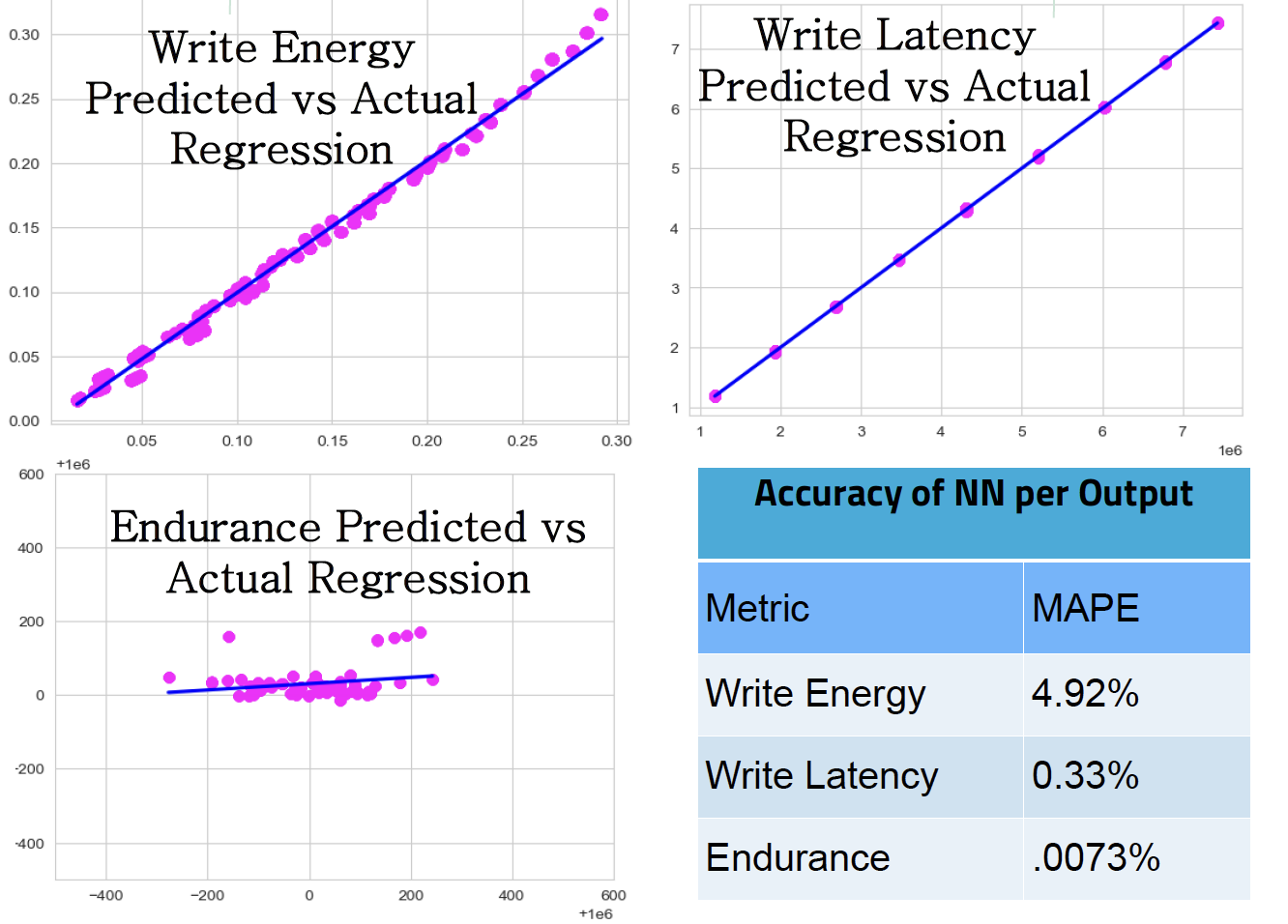

Static Model Evaluation

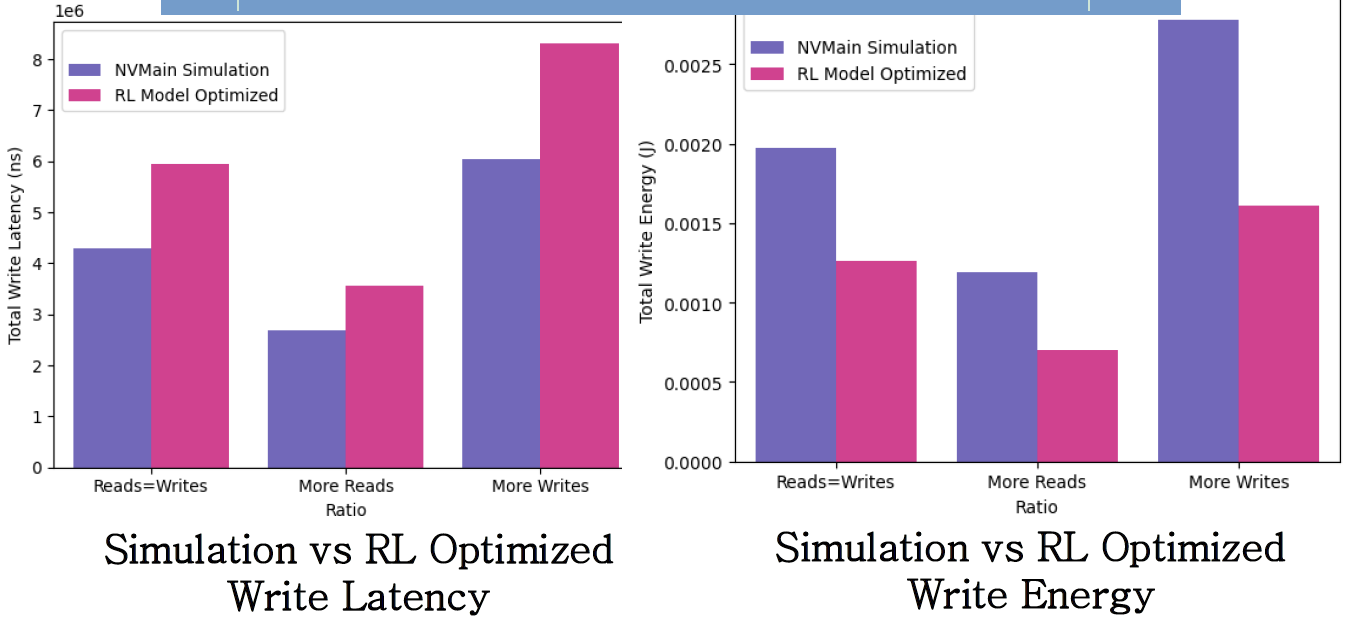

Dynamic Model Evaluation

Efficient Machine Learning-Based Error Prediction for Phase Change Memory

Background

Recent advancements in PCM include the development of multi-level cell (MLC) operations, which allow multiple bits to be stored per cell[2]. The state of a PCM cell is changed by applying heat to that individual cell. In single-level cells (SLC), this takes one burst of heat, while multi-level cells (MLC) change state through multiple consecutive heat applications[3].
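The difference between one heat burst (SLC) and several consecutive heat applications (MLC) can be illustrated with a toy program-and-verify loop. This is a minimal sketch and not a device model: the function name `program_cell`, the abstract level scale, and the `step` and `tolerance` values are all hypothetical and chosen only for illustration.

```python
def program_cell(target_level, levels=4, step=0.5, tolerance=0.25, max_pulses=32):
    """Toy program-and-verify loop (all parameters are illustrative assumptions).

    The cell starts at level 0 and receives one pulse per iteration until
    its state is within `tolerance` of the target level.
    Returns (final_state, pulses_applied).
    """
    if not 0 <= target_level < levels:
        raise ValueError("target_level must be in [0, levels)")
    state = 0.0
    pulses = 0
    # Verify step: keep pulsing while the state is still too far from the target.
    while abs(state - target_level) > tolerance and pulses < max_pulses:
        # Each pulse moves the state by at most `step`, never past the target.
        state += min(step, target_level - state)
        pulses += 1
    return state, pulses


# SLC assumption: two levels, one full-strength pulse reaches level 1.
slc_state, slc_pulses = program_cell(1, levels=2, step=1.0)
# MLC assumption: four levels, smaller pulses, so the top level needs several.
mlc_state, mlc_pulses = program_cell(3, levels=4, step=0.5)
```

Under these assumed parameters the SLC write completes in one pulse, while the highest MLC level needs several, which is the source of the extra write latency and energy in MLC operation.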

Motivation

In Phase Change Memory, the asymmetric temperatures required for SET (0 to 1) and RESET (1 to 0) operations can cause Write Disturbance Errors (WDEs), in which heat from a RESET operation leaks into adjacent ‘0’ cells and triggers an unintended SET operation[4]. Random bit flips are also a concern, stemming from factors such as stochastic switching, resistance drift, and external influences like thermal variation and radiation. Endurance is another issue: cells have a lifespan of 10^7 to 10^8 write cycles, and a cell that wears out leaves a ‘stuck-at’ fault. As PCM cells are packed more densely, error rates increase, yet existing error-correction methods cannot scale adequately to address these changes[j]. Our approach addresses these challenges by using machine learning to predict errors, offering a dynamic solution to the changing states of PCM cells.
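The write disturbance mechanism described above can be sketched as a small simulation over one row of cells. This is an illustrative model under stated assumptions: the function name `write_row`, the one-dimensional neighbourhood, and the single disturbance probability `p_disturb` are hypothetical and are not taken from the project or from any simulator.

```python
import random


def write_row(row, updates, p_disturb, rng):
    """Apply `updates` ({index: bit}) to `row` and model write disturbance.

    A RESET is a 1 -> 0 write. Each neighbour of a RESET cell that is idle
    (not written in this operation) and holds 0 is flipped to 1 with
    probability `p_disturb` (an assumed, uniform disturbance rate).
    Returns (new_row, disturbed_indices).
    """
    new_row = list(row)
    # Identify RESET operations before overwriting the old values.
    reset_cells = [i for i, bit in updates.items() if row[i] == 1 and bit == 0]
    for i, bit in updates.items():
        new_row[i] = bit
    disturbed = []
    for i in reset_cells:
        for j in (i - 1, i + 1):
            in_range = 0 <= j < len(row)
            if in_range and j not in updates and new_row[j] == 0:
                if rng.random() < p_disturb:
                    new_row[j] = 1  # unintended SET caused by leaked heat
                    disturbed.append(j)
    return new_row, disturbed


rng = random.Random(0)
# Worst case (p_disturb = 1.0): both idle '0' neighbours of cell 1 are disturbed.
worst_row, worst_hits = write_row([0, 1, 0, 1, 0], {1: 0}, 1.0, rng)
# Ideal case (p_disturb = 0.0): the RESET leaves its neighbours untouched.
ideal_row, ideal_hits = write_row([0, 1, 0, 1, 0], {1: 0}, 0.0, rng)
```

Only idle neighbours that hold ‘0’ are vulnerable in this model, which matches the description of heat from a RESET triggering an unintended SET in adjacent ‘0’ cells.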

Methodologies

Trace files were generated to simulate memory reads and writes in the simulator. Errors were injected into these trace files at different percentages according to Equations (1) and (2), using the parameters in Table 1. The error-injected files were then run through the NVMain simulator with the following parameters. We used NVMain’s output to train two multi-output machine learning models, a sequential deep neural network and an AdaBoost regression model, to predict error percentages for bit flips and Write Disturbance Errors (WDEs). Next, we trained a convolutional neural network on those outputs to predict the number of errors per line, which will inform Error-Correcting Pointer (ECP) placement, since ECP operates at line granularity.
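As a rough illustration of the injection and per-line counting steps, the sketch below flips bits in a list of data words and then counts errors per memory line. It is not the project's injection procedure: Equations (1) and (2) are not reproduced here, so an independent per-bit flip probability is assumed instead, and the names `inject_bit_flips` and `errors_per_line`, the 64-bit word width, and the 8-words-per-line grouping are hypothetical.

```python
import random


def inject_bit_flips(words, flip_rate, rng, width=64):
    """Flip each bit of each data word independently with probability `flip_rate`.

    Assumption: a simple Bernoulli model per bit, standing in for the
    injection equations that are not shown in this document.
    """
    corrupted = []
    for word in words:
        mask = 0
        for bit in range(width):
            if rng.random() < flip_rate:
                mask |= 1 << bit
        corrupted.append(word ^ mask)
    return corrupted


def errors_per_line(original, corrupted, words_per_line=8):
    """Count flipped bits in each memory line (a group of consecutive words)."""
    counts = []
    for start in range(0, len(original), words_per_line):
        stop = start + words_per_line
        pairs = zip(original[start:stop], corrupted[start:stop])
        # XOR exposes the differing bits; count the ones in each result.
        counts.append(sum(bin(a ^ b).count("1") for a, b in pairs))
    return counts


rng = random.Random(42)
clean = [0] * 16  # two hypothetical 512-bit lines of all-zero data
noisy = inject_bit_flips(clean, 0.01, rng)
line_errors = errors_per_line(clean, noisy)  # one error count per line
```

Per-line counts of this kind are the sort of target a model could be trained to predict when deciding how much correction capacity each line needs.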

Results

Trained on the output parameters of the NVMain simulator, the neural network and AdaBoost model accurately predicted the percentage of both types of errors. The convolutional neural network then used these results to estimate the number of errors per line, informing the efficient use of standard error correction mechanisms. The effectiveness of this approach highlights the potential of machine learning-driven error prediction to enhance the robustness and efficiency of MLC PCM systems.