Introduction

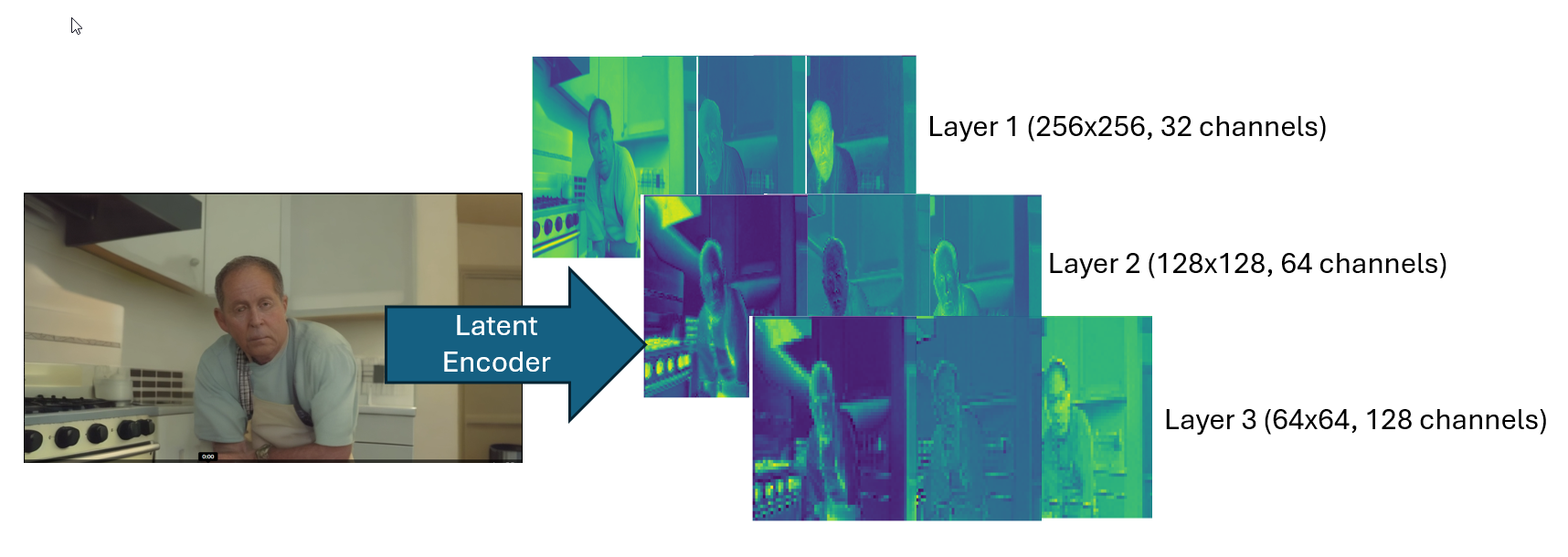

The rise of generative AI has enabled highly realistic text-to-video models, raising concerns about misinformation across social media, news, and digital communications. AI-generated videos can be used to manipulate public opinion, influence elections, and create false narratives, so robust detection methods are essential for maintaining trust.

Our study of video generation models showed that diffusion transformers operate on noisy latent spaces. This inspired our classifier’s architecture, which analyzes videos using the same structural units as the generation models. The approach is designed to stay adaptable to emerging generation techniques while maintaining high detection accuracy. The explainable video classifier has three stages: a convolutional encoder that produces a latent representation, a patch vectorizer for feature extraction, and a transformer that performs the final classification. Integrated Gradients (IG) provides transparency by highlighting the video elements that influenced the model’s decision, enabling human-interpretable explanations.

We developed a model that identifies AI-generated content and classifies videos accordingly. Its design was informed by a close study of state-of-the-art generative models, so that it aligns with their underlying mechanisms. In addition to achieving high accuracy, we validated that the model provides clear and interpretable explanations for its decisions.
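To make the role of Integrated Gradients more concrete, the following is a minimal sketch of the attribution computation on a toy model. It is not the classifier described here: a four-feature logistic scorer stands in for the video model, the all-zeros baseline is an assumption, and the names `model`, `model_grad`, and `integrated_gradients` are hypothetical. IG averages the gradient of the score along a straight path from the baseline to the input and scales it by the input difference, so the attributions sum (approximately) to the change in score.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def model(x, w, b):
    """Toy differentiable scorer (stand-in for a real classifier)."""
    return sigmoid(x @ w + b)

def model_grad(x, w, b):
    """Analytic gradient of the toy score with respect to each input feature.

    Accepts a batch of inputs with shape (n, d) and returns shape (n, d).
    """
    s = model(x, w, b)                      # shape (n,)
    return (s * (1.0 - s))[:, None] * w[None, :]

def integrated_gradients(x, baseline, w, b, steps=200):
    """Approximate IG with a midpoint Riemann sum along the straight path."""
    alphas = (np.arange(steps) + 0.5) / steps            # midpoints in (0, 1)
    path = baseline + alphas[:, None] * (x - baseline)   # shape (steps, d)
    avg_grad = model_grad(path, w, b).mean(axis=0)       # average gradient
    return (x - baseline) * avg_grad

# Hypothetical weights and input, chosen only for illustration.
w = np.array([2.0, -1.0, 0.5, 0.0])
b = -0.25
x = np.array([1.0, 0.5, -1.0, 3.0])
baseline = np.zeros_like(x)  # assumed "no signal" reference input

attributions = integrated_gradients(x, baseline, w, b)
score_change = model(x, w, b) - model(baseline, w, b)
```

In this sketch a feature with zero weight receives zero attribution, and the sign of each attribution shows whether the feature pushed the score up or down. For a deep video model the analytic gradient would be replaced by automatic differentiation, and the choice of baseline (for example a black or blurred clip) would need to be justified separately.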