Not that long ago, brain-computer interface (BCI) technology, which harnesses brainwaves to control external devices remotely with a computer serving as intermediary, was the stuff of science fiction. Today, thanks to advances in neuroscience, computer science and engineering, BCI is an established field of investigation at the frontiers of research. Its promise to augment human capabilities could one day help restore vision, hearing and other normal functions to people with physical impairments.

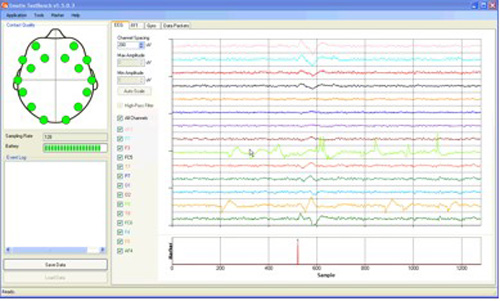

This past summer, the College of Engineering and Computer Science (CECS), in collaboration with Olive View–UCLA Medical Center, embarked on an ambitious BCI research project designed to make getting around easier for people who use wheelchairs. “We want to apply noninvasive BCI technology to wheelchair users who may be physically or cognitively challenged,” says C.T. Lin, professor of mechanical engineering, who is spearheading the effort. “We want to see how we can enhance their wheelchair mobility.”

Unlike prior research in this area, which demonstrated the technology with able-bodied participants in open spaces, the CECS project targets people with physical impairments, with the goal of helping them navigate a dynamically changing environment. “If you have someone with poor vision or who has a spinal cord injury and may not be able to use their arms or hands, that will affect how much they will be able to see or whether they can make good decisions while moving the wheelchair around,” explains Lin. “It will be quite different from a normal user.”

The project team, consisting of three graduate students in mechanical engineering and four seniors representing mechanical engineering, electrical engineering and computer science, has acquired a motorized wheelchair and is retrofitting it to accommodate the components. The students have completed 80 percent of the mechanical design and are developing a motion cognition program capable of recognizing the wheelchair's surroundings and determining its heading direction and the open space available in a room. Soon a user will be able to sit in the wheelchair and do nothing while a laptop computer drives it autonomously.

Once this is accomplished, the next step will be to incorporate user brainwaves. At that point the unit will have two sets of motion commands, one created by the user and the other generated by a sensor-based analysis. The wheelchair can then run in autonomous mode, where the computer makes all the decisions, or in hybrid mode, where commands from the user are augmented by sensor analysis.

“If someone is normal, we can assume he or she is making good decisions, but if someone is challenged, we have to weigh commands between the two so the wheelchair won’t bump into a person, table or doorway,” Lin explains.
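One way to picture the weighing Lin describes is as a weighted average of the two motion commands. The Python sketch below is only an illustration under stated assumptions: the `MotionCommand` type, the `blend_commands` function and the `user_weight` parameter are hypothetical names, and the article does not say how the team actually combines the two command sets.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MotionCommand:
    """Hypothetical motion command: forward speed and turn rate (arbitrary units)."""
    linear: float
    angular: float


def blend_commands(user: MotionCommand, sensor: MotionCommand,
                   user_weight: float) -> MotionCommand:
    """Weighted average of the user's command and the sensor-based command.

    user_weight = 0.0 corresponds to autonomous mode (computer decides);
    values between 0 and 1 correspond to hybrid mode. This linear blend is
    an assumption made for illustration, not the project's method.
    """
    if not 0.0 <= user_weight <= 1.0:
        raise ValueError("user_weight must be between 0 and 1")
    sensor_weight = 1.0 - user_weight
    return MotionCommand(
        linear=user_weight * user.linear + sensor_weight * sensor.linear,
        angular=user_weight * user.angular + sensor_weight * sensor.angular,
    )


# The user asks to go straight ahead; the sensors suggest slowing and turning.
user_cmd = MotionCommand(linear=1.0, angular=0.0)
sensor_cmd = MotionCommand(linear=0.0, angular=0.5)

autonomous = blend_commands(user_cmd, sensor_cmd, user_weight=0.0)
hybrid = blend_commands(user_cmd, sensor_cmd, user_weight=0.5)
```

In this sketch, lowering `user_weight` for a user who has difficulty seeing or steering would give the sensor analysis more say in avoiding a person, table or doorway.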

In carrying out the project, the team has an unusual advantage. One of its members is wheelchair-bound, and the group has also recruited a student volunteer who is paralyzed from the shoulders down to test the unit. They are expected to provide valuable comments on the design and insights into test-driving the smart wheelchair. The group is hoping to wrap up the project within a year and a half, delivering a fully intelligent wheelchair that will be assessed by the district office of the State Department of Rehabilitation. “That’s making this project as real as possible,” Lin says. “We would like to see it become a product available to users and eventually go into production.”
